This tutorial walks you through the step-by-step process of upgrading PostgreSQL to the latest version on RHEL/Rocky Linux 9 systems, including backing up your databases, adding the necessary repositories, installing the new version, migrating data, optimizing performance, and final cleanup. The guide is intended for administrators who need to upgrade their PostgreSQL database system safely and efficiently, and includes recommendations for specific use cases such as using the TimescaleDB or PostGIS extensions. After completing this guide, your PostgreSQL database will be ready for your next deployment with optimized performance and a fully updated environment.

Backup of existing database

There are two options for backing up before starting a database update. Choose the one that suits you better:

- Snapshot of a virtual machine (VM):

If you’re running PostgreSQL on a VM, creating a snapshot of the entire VM is a quick and safe way to backup. This option is particularly useful if you are using various extensions such as TimescaleDB, where the backup process is more complex and is not included in this guide. Snapshot allows you to quickly restore the entire system to its original state, which can be convenient in case of complications during an upgrade.

- Classic database backup using pg_dumpall:

This method creates a text backup of all databases on the server.

sudo -u postgres pg_dumpall > /path/to/backup/backup.sqlImportant: Make sure that the path where you are storing the backup has enough free space. It is a good idea to store the backup outside the database server (for example, on a network drive or other external storage) to protect it in case of a server failure.

Both options have their advantages, so choose the one that better suits your needs and environment.

Adding a PostgreSQL repository

To install the latest version of PostgreSQL on RHEL/Rocky Linux 9, you need to add the official PostgreSQL repository. This step will ensure that you have access to the latest PostgreSQL packages.

Download and install the PostgreSQL repository RPM package:

sudo dnf install -y https://download.postgresql.org/pub/repos/yum/reporpms/EL-9-x86_64/pgdg-redhat-repo-latest.noarch.rpmThis package adds the official PostgreSQL repository to the system.

Disabling the default PostgreSQL module:

RHEL and Rocky Linux distributions may have the PostgreSQL module enabled by default, which may contain older versions. To ensure that a newer version of PostgreSQL is installed from the added repository, you must disable this default module:

sudo dnf -qy module disable postgresqlThis command disables older PostgreSQL modules, ensuring that the package from the added repository is used during installation.

After adding the repository and disabling the older module, you are ready to install the latest version of PostgreSQL.

Installing a new version of PostgreSQL

After adding the repository, it is time to install the new version of PostgreSQL.

Update package list:

First, update the package list to inform the system of the latest versions available in the newly added PostgreSQL repository:

sudo dnf updateInstalling a new version of PostgreSQL:

Install the specific version of PostgreSQL you want to use. For example, if you are installing PostgreSQL 15:

dnf install -y postgresql15-server postgresql15-contribThis command installs the necessary PostgreSQL server packages, including database management and initialization tools.

Database initialization:

After successful installation of the new version of PostgreSQL, it is necessary to initialize the database cluster, which creates the necessary directory structure and initialization files. Use the command to initialize:

sudo /usr/pgsql-15/bin/postgresql-15-setup initdbReplace version “15” with the corresponding number if you are installing a different version of PostgreSQL. This step is important because it prepares the environment for migrating data from the old version.

Note: It is very important that the newly initialized database has the same settings as the original database. This means that if checksums were not enabled on the old version, for example, you cannot enable them when initializing the new version. If the new cluster settings were different, pg_upgrade could fail or cause problems during data migration.

Important: In this step, do not run the new version of PostgreSQL immediately after installation and initialization. If you were to start the new version now, it could cause a port collision because the old version of PostgreSQL is still running on the same port (by default, port 5432). The new version must remain down until you complete the data migration and make any port adjustments or configure the service to run alongside the existing instance.

Checking available disk space

Before upgrading PostgreSQL, it is important to check if you have enough free disk space. Data migration can require a significant amount of space, especially if you have large databases.

Check the free disk space using the command:

df -hThis command displays a summary of disk space usage on each partition. Pay special attention to partitions where database files and PostgreSQL configuration files are located, such as /var/lib/pgsql or other specific directory where you have databases stored.

Estimate of space required:

Upgrades and migrations may temporarily require twice the space of the current size of the databases, as temporary copies and backups of data may be created during the process. So if your database is 50 GB, you should have at least another 50 GB of free space.

Exception: if you use the -k parameter with the pg_upgrade tool during migration, no copies of the data files will be made, but instead hardlinks will be used. This means you won’t need as much free disk space and the migration will be faster. On the other hand, the data will be shared between the old and new cluster, making it impossible to safely run the old version of PostgreSQL after the migration. Therefore, the old data will not be automatically backed up.

Freeing up space (optional):

If you find that you do not have enough disk space, you may want to consider the following steps:

- Delete unnecessary files and old backups.

- Moving data to external storage.

- Increase disk capacity if possible.

Adequate free disk space is crucial for a successful upgrade without service interruption or data loss.

Installing Extensions and Transferring Configuration

Before the actual data migration, it is crucial to prepare the environment for the new version of PostgreSQL. This step includes installing the necessary extensions and transferring the configuration from the old version. Proper installation and configuration ensures that the database will work the same as before after migration, including all specific settings and features.

Installing an extension

If you are using PostgreSQL extensions, such as TimescaleDB or PostGIS, it is important to install the same versions of these extensions in the new version of PostgreSQL. Using an incorrect or incompatible version of an extension can cause errors and system instability.

Finding available extensions:

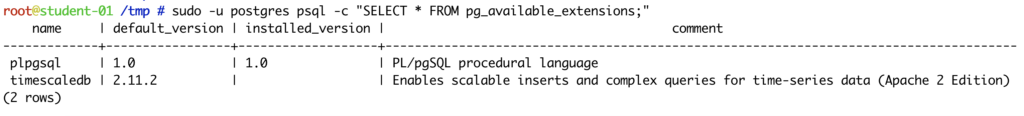

Instead of \dx, use a SQL query that lists all available extensions on the system, including those that are not yet activated:

sudo -u postgres psql -c "SELECT * FROM pg_available_extensions;"This command lists all available extensions, including their version and installation status. For example, for TimescaleDB you should see something similar:

Installing the same version of TimescaleDB into a new version of PostgreSQL:

After installing the new version of PostgreSQL, you must install the same version of TimescaleDB that you were using before. Make sure that the TimescaleDB version is compatible with the new PostgreSQL version and that it is the same as the original installation.

Example of installing a specific version of TimescaleDB:

sudo dnf install timescaledb-2-postgresql-<version>-<specific_version> timescaledb-2-loader-postgresql-<version>-<specific_version>Important: Make sure the version of TimescaleDB you are installing matches the version you were using before the upgrade to avoid incompatibilities and data migration issues.

Installation of PostGIS into the new version of PostgreSQL:

If you use PostGIS, install it in the new version of PostgreSQL in the same way as the other extensions:

sudo dnf install postgresql-<version>-postgisEnsure that the PostGIS version is compatible with the new PostgreSQL version.

Configuration transfer

After installing the extension, the next step is to transfer the configuration from the old PostgreSQL version to the new one. This will ensure that all custom settings are preserved.

Transfer the contents of the postgresql.conf file:

This file contains server settings such as memory, connection, logging, and more. Before upgrading, save a copy of this file and transfer the necessary settings after installing the new version of PostgreSQL. However, take into account new changes and features that may bring optimization in the new version of PostgreSQL.Example of transferring settings:

sudo cp /var/lib/pgsql/<old_version>/data/postgresql.conf /var/lib/pgsql/<new_version>/data/After the transfer, check the file and edit it according to the current needs and capabilities of the new version.

Transfer the rules from pg_hba.conf:

This file defines the rules for connecting to the database and authenticating users. Transfer custom rules to the new version of PostgreSQL:

sudo cp /var/lib/pgsql/<old_version>/data/pg_hba.conf /var/lib/pgsql/<new_version>/data/Again, check the file after the transfer to verify that the rules meet your security needs.

Transfer additional configuration files (if you have them):

If you use additional custom configuration files or customizations, be sure to transfer and update them in the new version.

Verification of environmental readiness

After installing the extension and transferring the configuration, verify that the new environment is ready for data migration. Verify that all extensions are installed and activated correctly and that the configuration is loaded correctly.

Now you are ready to migrate your data to the new version of PostgreSQL.

Data Migration / Upgrade

Now that the new version of PostgreSQL is installed and ready, it is time to migrate the data from the old version to the new one. This process involves moving data files from the original data directory to the new one and ensuring that all data and configurations are migrated correctly.

Stopping the old version of PostgreSQL

Before you begin the migration, it is important to stop the running instance of the old version of PostgreSQL. This step will ensure that no new data is written to the database during the migration.

sudo systemctl stop postgresql-<old_version>Replace <old_version> with the version number you are currently using (e.g. postgresql-13).

Pre-migration check using pg_upgrade –check

Before the actual data migration, it is very useful to run pg_upgrade with the –check parameter. This parameter will perform a compatibility check between the old and new database cluster without actually migrating the data. It will help you identify issues that could cause the migration to fail, and highlight settings that need to be fixed.

sudo -u postgres /usr/pgsql-<new_version>/bin/pg_upgrade -k -b /usr/pgsql-<old_version>/bin -B /usr/pgsql-<new_version>/bin -d /var/lib/pgsql/<old_version>/data -D /var/lib/pgsql/<new_version>/data --checkExplanation of parameters:

- -k: Using existing data with hardlinks, which speeds up migration and saves space.

- -b: Path to the binaries of the old version of PostgreSQL.

- -B: Path to the binaries of the new version of PostgreSQL.

- -d: Path to the data directory of the old version of PostgreSQL.

- -D: Path to the data directory of the new version of PostgreSQL.

- –check: Performs a compatibility check between the old and new cluster without performing a migration, highlighting any issues that need to be fixed before migration.

Compatibility check:

- This command checks whether the old and new versions of PostgreSQL are compatible for migration. If it detects problems such as incompatible extensions, different checksum settings, or other conflicting settings, it will notify you.

Secure authentication:

- Since –check doesn’t make any changes, you can safely run it to make sure the migration goes smoothly. If there are any problems, you can fix them before the actual migration.

Identification of settings:

- The –check parameter also helps you identify whether key settings, such as checksums, are set the same in the old and new cluster. This will prevent problems that could cause the migration to fail.

Recommendation:

Always run pg_upgrade –check before you run the actual migration with pg_upgrade to detect potential problems in advance and resolve them. This step can save you time and minimize the risk of complications during the migration.

Using pg_upgrade

The pg_upgrade tool is designed to safely and efficiently move data from an old version of PostgreSQL to a new one. Adding the -k option creates hardlinks between the old and new data files, which means that the data is not physically copied or used as a backup. This procedure greatly speeds up the migration and allows the new database to use the data immediately. If you were to try to start the old cluster after migration, PostgreSQL will detect that the data is already being used by the new version and refuse to start the old cluster, preventing potential conflicts and data corruption.

Example of the pg_upgrade command:

sudo -u postgres /usr/pgsql-<new_version>/bin/pg_upgrade -k -b /usr/pgsql-<old_version>/bin -B /usr/pgsql-<new_version>/bin -d /var/lib/pgsql/<old_version>/data -D /var/lib/pgsql/<new_version>/dataExplanation of parameters:

- -k: Using existing data with hardlinks, which speeds up migration and saves space.

- -b: Path to the binaries of the old version of PostgreSQL.

- -B: Path to the binaries of the new version of PostgreSQL.

- -d: Path to the data directory of the old version of PostgreSQL.

- -D: Path to the data directory of the new PostgreSQL version.

Replace <old_version> and <new_version> with the version numbers you are using. This command migrates the data from the original data directory to the new one, while preserving the database structure and data.

When using the pg_upgrade tool (even with the -k parameter), no data is moved from the old data directory to the new one. Instead, the data is copied or hardlinked (depending on the parameters and file system used), and the new version of PostgreSQL uses its own data directory.

How it works:

- Without the -k parameter: if you do not use the -k parameter, pg_upgrade will copy the data from the old directory (e.g. /var/lib/pgsql/12/) to the new directory (e.g. /var/lib/pgsql/15/). This means that the data is migrated to the new directory and the old directory with the data can be removed after the migration.

- With -k: When using -k with pg_upgrade, data is shared between the old and new cluster using hardlinks. This means that the old data is not physically copied, but both clusters reference the same data blocks. When you try to start the old version, PostgreSQL automatically detects that the data has already been used by another version and refuses to start the old cluster. However, the new version of PostgreSQL will run normally. So this parameter saves space and speeds up the migration, but it cannot be used as a fallback mechanism for the old version because the old cluster will not be able to be restarted.

Basic conditions for the -k parameter:

- The file system must support hardlinks: the -k parameter can only be used if you are using a file system that supports hardlinks (e.g. ext4, XFS, etc.). Hardlinks allow the new data directory to reference the same physical data blocks as the old data directory, which means that data is not copied but shared between versions.

- Unified file system: Both data directories (old and new) must be on the same file system. Hardlinks cannot reference files on different file systems.

Advantages of using the -k parameter:

- Significantly faster migration: because there is no physical copying of data when using hardlinks, migration using pg_upgrade with the -k parameter is significantly faster than standard migration. Data is not re-written to disk, which shortens the process time.

- Lower free space requirements: because hardlinks reference the same data, you don’t need as much free disk space as a full data copy. This allows you to migrate even in environments with limited free space.

Disadvantages and what to watch out for when using the -k parameter

- Sharing data between old and new cluster: when you use the -k parameter, data is hardlinked between the old and new data directory. This means that even if the data is available in two different directories (e.g. /var/lib/pgsql/12/ and /var/lib/pgsql/15/), it is the same physical data on disk. However, after the migration, PostgreSQL automatically detects that the data has already been used by another version, and the old cluster does not start at all. This avoids potential problems that could occur if both clusters were working on the same data.

- The old PostgreSQL cluster cannot be restarted: You cannot restart an old PostgreSQL cluster (e.g. PostgreSQL 12) after using the -k parameter. When trying to start the old version, PostgreSQL automatically detects that the data has already been used by another version and refuses to start the old cluster.

After successful data migration, you can start the new version of PostgreSQL. You can do this with the command:

sudo systemctl start postgresql-<new_version>This activates the new version of PostgreSQL, which now runs with the migrated data. Remember to also enable auto-start on system startup if you have not already done so:

sudo systemctl enable postgresql-<new_version>Now your new database is ready for full operation.

Database analysis and maintenance after migration

After successful data migration, it is advisable to analyze and optimize the database. This step will ensure that the database statistics are up to date and that optimal performance is achieved.

Database analysis and maintenance after migration

After migration, run the vacuumdb command, which analyzes and maintains all databases in several stages. This command will help improve database performance by optimizing internal structures after migration:

sudo -u postgres /usr/pgsql-<version>/bin/vacuumdb --all --analyze-in-stagesThis command analyzes databases in several phases, which is less resource-intensive than a complete one-time analysis.

Advantages of using vacuumdb -analyze-in-stages

This option performs the analysis in several stages, allowing faster access to valid statistics while minimizing the load on the system. This step is especially useful after a migration when statistics need to be updated to optimize performance.

Reindexation after migration

When migrating between PostgreSQL versions, especially from versions 13, 14 and 15, it is important to reindex the database. Reindexation is crucial because of changes in the structure of the B-tree indexes, which have been optimized over time. This step will ensure that your indexes are fully updated and optimized for the new version of PostgreSQL, which can significantly improve performance.

Why reindex?

- PostgreSQL 13: This version brought improvements in B-tree index algorithms, including de-duplication, which means that reindexation is recommended after migration from this version and later. The main benefits of these improvements are disk space savings and better RAM utilization, which contribute to overall database performance improvements.

- PostgreSQL 14 and 15: In these versions, further improvements have been made that affect index efficiency. Reindexing after migration from these versions will ensure that your indexes are optimized for the new features and enhancements.

How to reindex:

After a successful migration, we recommend running a reindex of the entire database:

sudo -u postgres reindexdb --allWhen reindexation is necessary:

- Migration from PostgreSQL 13 and newer: Changes to the structure of B-tree indexes mean that reindexing after migration from these versions is important for optimal performance.

This step will ensure that your indexes are fully optimized and that you are able to take full advantage of the performance enhancements that new versions of PostgreSQL bring.

Performance Tuning

After upgrading the database, it is advisable to re-examine and optimize the parameters for best performance. This is especially important if hardware configuration improvements have been made or if new features are available in the new version of PostgreSQL that may affect performance.

Memory settings

Setting memory parameters is essential for optimizing PostgreSQL performance:

- shared_buffers: this parameter specifies the amount of memory that PostgreSQL uses for shared data blocks. It is recommended to set this parameter to a value corresponding to approximately 25% of the available RAM.

- work_mem: This parameter affects the amount of memory allocated to each sorting and hashing operation. For more complex queries or multiple concurrent users, this parameter may need to be increased.

- maintenance_work_mem: This parameter specifies the amount of memory allocated for maintenance operations such as indexing and autovacuum. Increasing this value can speed up these operations, especially for larger databases.

Changes in compression:

- WAL compression (PostgreSQL 14 and later): PostgreSQL 14 adds support for compressing WAL files using the Zstandard (ZSTD) and LZ4 algorithms. This allows you to significantly reduce the size of WAL logs, resulting in disk space savings and potentially improved performance when transferring logs over the network.

- TOAST compression (PostgreSQL 15): PostgreSQL 15 introduces support for data compression in TOAST tables using the LZ4 algorithm. TOAST (The Oversized-Attribute Storage Technique) is used to store large values such as large text strings or binary data. LZ4 compression is fast and efficient, which can lead to a reduction in the size of the stored data and improved read and write performance.

Autovacuum

Autovacuum is an important process that provides database maintenance by automatically performing operations such as removing dead rows and updating statistics. After upgrading your database, you should check your autovacuum settings and adjust them if necessary to match the new performance requirements.

- Frequency and thresholds: if the database frequently makes a large number of changes, consider lowering the thresholds for triggering autovacuum to trigger it more frequently.

- Parallel running: increase the number of parallel autovacuum processes if possible, which can improve performance when maintaining a database with many tables.

Logging in

If you need to monitor performance or debug problems, adjust the logging settings. This will allow you to track queries that are slowing down the database or identify other issues:

- log_min_duration_statement: set this parameter to the amount of time the query should be logged if it takes longer than the specified value. This can help you identify slow queries.

- log_checkpoints, log_autovacuum_min_duration: these parameters can help monitor activities that can affect performance, such as checkpoints or long autovacuum operations.

Using TimescaleDB Tuner

If you use TimescaleDB, you can use their TimescaleDB Tuner tool to automatically optimize performance settings. This tool analyzes your configuration and recommends the optimal settings for parameters such as shared_buffers, work_mem, maintenance_work_mem and more.

You can run TimescaleDB Tuner by using the following command:

timescaledb-tuneThis tool will go through your current configuration and provide recommendations for adjusting the settings. In addition to basic recommendations, the tuner also allows you to set optional parameters that can further optimize database performance. We recommend reviewing these parameters and customizing them to meet the specific needs of your system and hardware environment.

Using these parameters, you can achieve optimal database settings after the upgrade and ensure that the database will run efficiently even under higher load.

Verification of functionality

After all the modifications and migrations have been made, it is important to verify that the new version of PostgreSQL is running correctly and that all data is available. This step will ensure that the migration was successful and that the database is working as expected.

Verifying the PostgreSQL version

Make sure that the new version of PostgreSQL is installed and running correctly. To do this, you can use the command:

sudo -u postgres psql -c "SELECT version();"Make sure that the version displayed matches the version you have updated to.

Database access verification

Connect to the database and check that it is available. Verify that you can connect to the database, browse tables, and perform basic queries.

Data control

Verify that all data is migrated correctly. Verify data integrity and correct configuration. You can check the key tables and data that are most important to your applications.

Verification of extensions and functionalities

Make sure that all extensions you use (e.g. TimescaleDB, PostGIS) are installed and working properly. Also check that the configuration has remained consistent with your requirements.

Performance testing

If you have made adjustments to the performance settings, verify that these changes have had a positive effect on database performance. Run a few tests to ensure that the system is running smoothly and without problems.

Checking logs

Review the PostgreSQL log files to verify that no errors occurred during the migration or after the new version was started. You can find the logs in the directory specified in the postgresql.conf settings (typically /var/lib/pgsql/<version>/data/log or similar location). Look for any warnings or errors that might indicate migration or performance issues.

sudo tail -f /var/lib/pgsql/<version>/data/log/*.logWatch the logs and check that they do not contain any serious errors or warnings.

Cleaning up after migration and important notes

After a successful migration and verification that the new version of PostgreSQL is working properly, it is important to perform a cleanup to free up disk space and remove old, no longer needed files and versions of PostgreSQL.

Delete old data files

If you used pg_upgrade, a script may be generated to remove the old data files. You can run this script with the following command:

./delete_old_cluster.shNote: This command permanently deletes the old data files, so make sure that the migration was successful and that you no longer need access to the original data.

Removing an old version of PostgreSQL

If you no longer need the old version of PostgreSQL and do not plan to use it in the future, you can remove it from the system:

dnf remove postgresql<old_version>-serverThis command removes the old version of PostgreSQL and its packages.

Note: When removing an old version of PostgreSQL, it is advisable to remove all extension packages that are associated with that version, if any. This will ensure that there are no unnecessary packages left on the system that you will no longer use.

Revision of system services

Make sure that the old version of PostgreSQL is not registered as a system service that could be inadvertently started. You can verify this with the command:

sudo systemctl list-unit-files | grep postgresqlIf you see old version services, deactivate them:

sudo systemctl disable postgresql-<old_version>Updating documentation and scripts

If you have scripts or documentation that reference an old version of PostgreSQL, be sure to update them to reference the new version. This includes backup scripts, monitoring tools, and other related configurations. Pay special attention to the following areas:

- Backup scripts: make sure that all backup scripts reference the correct version of PostgreSQL and are adapted to new features or changes that may affect the backup process (such as changes to supported commands or backup optimizations).

- Monitoring tools: update any software or tools that monitor database performance to be compatible with the new version of PostgreSQL. Make sure all monitoring metrics and alerts match the new version and its parameters.

- Automation scripts: if you use scripts to automate database administration (such as scripts for replication management, database maintenance, or deploying new features), update them to be fully compatible with the new version of PostgreSQL.

Familiarization with news and changes

Before performing a migration, it is always advisable to familiarize yourself with the new features, changes and expected behavior of the new PostgreSQL version. Recommended:

- Read the changelog: read the official PostgreSQL changelog for that version so that you are not surprised by any changes and so that you are prepared for new features, behavior changes, or removed features.

- Attend our webinars: we regularly host free webinars to inform you about what’s new in PostgreSQL and what to expect after the migration. It’s a great opportunity to learn about new features and get your questions answered by experts.

This guide should be comprehensive and cover all important aspects of upgrading PostgreSQL to RHEL/Rocky Linux 9. You can adapt it to the specific needs and environment of your organization.

Give us a Like, share us, or follow us 😍

So you don’t miss anything: